Overview

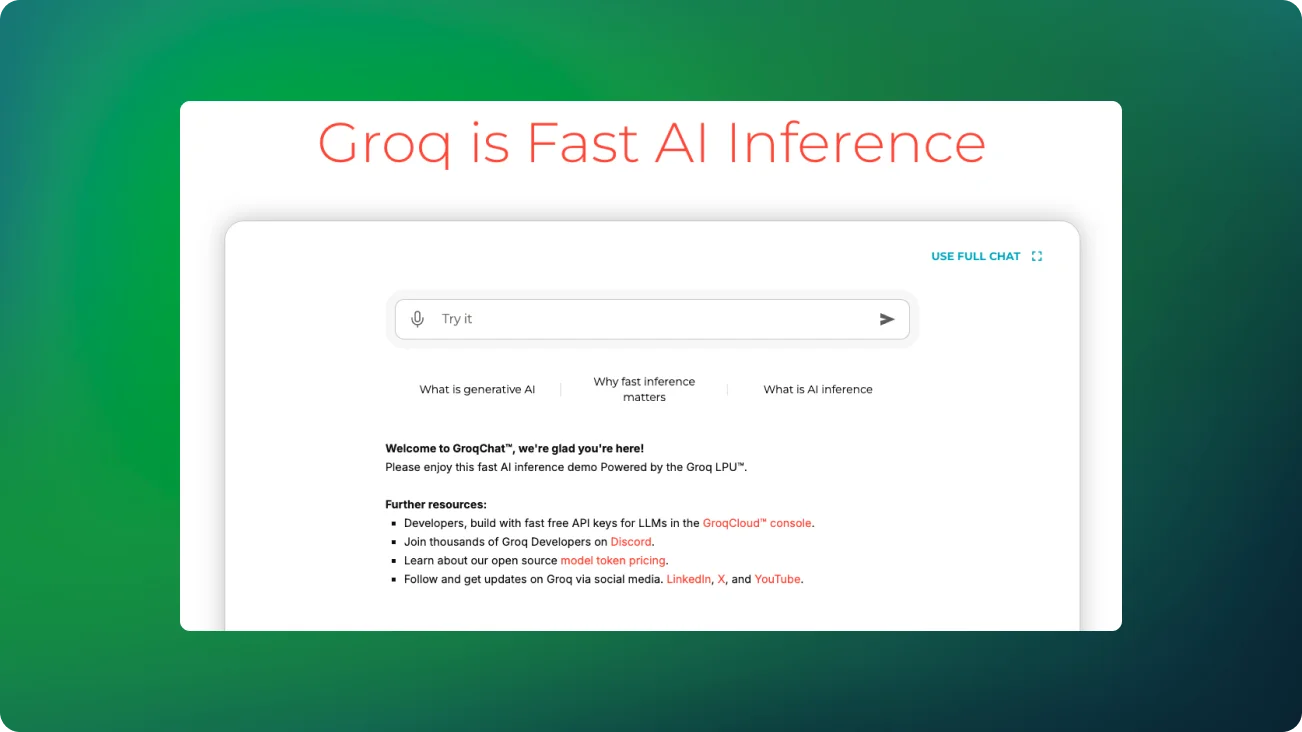

Groq is an AI computing company that provides very fast inference for large language models (LLMs) and other generative AI workloads. It designs its own inference hardware and operates cloud infrastructure built to run AI workloads with low latency.

Key Features

- Proprietary LPU (Language Processing Unit) hardware, purpose-built for AI inference

- OpenAI-compatible API endpoints

- Sub-second inference latency

- Support for a range of open models (e.g., Llama, Mixtral, Gemma)

- GroqCloud™, a cloud platform aimed at developers

- AI computing solutions built for enterprise requirements
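Because the endpoints are OpenAI-compatible, a request can be written as a standard chat-completions call. The following is a minimal sketch using only the Python standard library. It assumes the base URL `https://api.groq.com/openai/v1`, an API key stored in a `GROQ_API_KEY` environment variable, and a placeholder model ID (`MODEL` is hypothetical); check the current GroqCloud documentation before relying on any of these.

```python
import json
import os
import urllib.request

# Assumed OpenAI-compatible base URL; verify against current GroqCloud docs.
BASE_URL = "https://api.groq.com/openai/v1"
# Hypothetical placeholder; substitute a model ID available to your account.
MODEL = "llama-3.1-8b-instant"


def build_chat_request(prompt: str, api_key: str, model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions POST request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request and return the text of the first choice."""
    req = build_chat_request(prompt, os.environ["GROQ_API_KEY"])
    with urllib.request.urlopen(req, timeout=30) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

Calling `chat("Explain low-latency inference in one sentence.")` sends one request and returns the reply text. Separating request construction from sending keeps the payload easy to inspect and test offline; the same base URL can also be passed as `base_url` to the official `openai` Python client instead of hand-building requests.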

Use Cases

- Generative AI applications

- Real-time conversational AI interfaces

- Large language model (LLM) inference

- AI research and development

- Enterprise-scale AI deployment

- Accelerating machine learning model inference
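Real-time conversational interfaces usually display tokens as they are generated instead of waiting for the complete reply. Assuming Groq follows the OpenAI streaming convention (server-sent events whose `data: {...}` lines carry text in `choices[0].delta.content`, ending with `data: [DONE]`), a minimal parser might look like the sketch below. The function name `iter_stream_text` is hypothetical, and it accepts any iterable of lines so it can be exercised without a network call.

```python
import json
from typing import Iterable, Iterator, Union


def iter_stream_text(lines: Iterable[Union[bytes, str]]) -> Iterator[str]:
    """Yield text fragments from OpenAI-style server-sent event lines."""
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        # Payload lines start with "data:"; skip blanks, comments, and other SSE fields.
        if not line.startswith("data:"):
            continue
        data = line[len("data:"):].strip()
        if data == "[DONE]":  # end-of-stream sentinel
            break
        chunk = json.loads(data)
        choices = chunk.get("choices") or []
        if not choices:
            continue
        # Only the first choice is read; role-only or empty deltas carry no text.
        text = choices[0].get("delta", {}).get("content")
        if text:
            yield text
```

With a request body that includes `"stream": true`, the response object returned by `urllib.request.urlopen` can be passed in directly, since it iterates line by line; printing each fragment with `print(piece, end="", flush=True)` gives the incremental output a chat interface needs.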

Technical Specifications

- Low-latency inference

- Efficient compute performance

- Support for a variety of model architectures

- Cloud and on-premises deployment options

- Developer-friendly API that is straightforward to integrate

- Infrastructure that scales with demand
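Latency figures are easiest to verify by measuring them for your own workload. The sketch below times any streamed response and reports time to first chunk, total time, and chunks per second. The names `measure_stream` and `LatencyReport` are hypothetical, and counting text fragments is only a rough proxy for counting tokens.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass
class LatencyReport:
    time_to_first_chunk_s: Optional[float]  # None if the stream produced nothing
    total_time_s: float
    chunks: int

    @property
    def chunks_per_second(self) -> float:
        # Fragments approximate tokens only loosely; treat this as a rough rate.
        return self.chunks / self.total_time_s if self.total_time_s > 0 else 0.0


def measure_stream(
    open_stream: Callable[[], Iterable[str]],
    clock: Callable[[], float] = time.perf_counter,
) -> LatencyReport:
    """Time a streamed response end to end, including the request itself."""
    start = clock()
    first: Optional[float] = None
    count = 0
    # The stream is opened inside the timed region so request setup is counted.
    for _ in open_stream():
        if first is None:
            first = clock() - start
        count += 1
    total = clock() - start
    return LatencyReport(first, total, count)
```

Passing a function that opens the stream, rather than an already-open response, keeps connection and queueing time inside the measurement; the injectable `clock` makes the helper testable with fixed timestamps.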